Usability testing, supported by user-centered interaction design, helps us evaluate the impact of your products on users. It is a vital tool for evaluating and monitoring product performance, and we carry it out with care, drawing on our experience in analyzing user perception and its relevance to product design.

Usability testing is a way to see how easy something is to use by testing it with real users.

Users are asked to complete tasks, typically while they are being observed by a researcher, to see where they encounter problems and experience confusion. If several people encounter similar problems, recommendations are made to overcome these usability issues.
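To spot problems that several participants share, it helps to tally the researcher's notes per participant. The snippet below is a minimal sketch of that tally. The function name `recurring_issues`, the threshold `min_participants`, and the issue labels are all assumptions made for illustration, not part of any standard tool.

```python
from collections import Counter


def recurring_issues(observations, min_participants=2):
    """Return issues seen by at least `min_participants` participants.

    `observations` maps a participant ID to the list of issue labels
    noted for that participant (hypothetical labels, for illustration).
    """
    counts = Counter()
    for issues in observations.values():
        # Use a set so one participant hitting the same issue twice counts once.
        counts.update(set(issues))
    return {issue: n for issue, n in counts.items() if n >= min_participants}


# Made-up notes from three test sessions.
observations = {
    "p1": ["could not find search", "unclear checkout button"],
    "p2": ["unclear checkout button"],
    "p3": ["could not find search", "unclear checkout button", "slow page"],
}
print(recurring_issues(observations))
```

Issues that only one participant ran into are filtered out here, but they may still be worth a look if they blocked that person from finishing a task.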

Usability testing is a method used to evaluate how easy a website is to use. The tests take place with real users to measure how 'usable' or 'intuitive' a website is and how easy it is for users to reach their goals.

The key difference between usability testing and traditional testing (bug testing, acceptance testing, etc.) is that usability testing takes place with actual users or customers of the product. Whilst traditional testing might be undertaken by a developer, designer or project manager, usability testing reduces their bias by collecting feedback directly from the end user.

There are a few different types of usability testing or reasons to conduct usability research:

Comparative usability testing is used to compare the usability of one website with another. Comparative tests are commonly used to compare a website against peer or competitor sites; however, they can also be used to compare two designs to establish which provides the better user experience.

Think of card sorting as a way to efficiently determine how elements of your website should be organized. You'll develop a site hierarchy and navigation that meets your users' specific needs. Take the jumbled card graphic above. Each card has information on the reverse side: product descriptions, for instance, or support pages, informational content, and so on.

You ask users to categorize the content. You want rows and columns. Columns might represent page categories, for instance, while rows show importance. You can do this with people in person or virtually through an online tool. Either way, you want real users' feedback.
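Once several users have sorted the cards, one common way to read the results is to count how often each pair of cards ended up in the same group. The sketch below assumes each participant's sort is recorded as a mapping from a group name to the cards in it. The function name `co_occurrence` and the sample cards are hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants placed each pair of cards in the same group.

    `sorts` is a list with one entry per participant; each entry maps a
    group name chosen by that participant to the cards placed in it.
    """
    pairs = Counter()
    for groups in sorts:
        for cards in groups.values():
            # Sort so ("a", "b") and ("b", "a") are counted as the same pair.
            for pair in combinations(sorted(cards), 2):
                pairs[pair] += 1
    return pairs


# Made-up results from two participants.
sorts = [
    {"Shop": ["Pricing", "Product page"], "Help": ["FAQ", "Contact"]},
    {"Buy": ["Pricing", "Product page", "FAQ"], "Support": ["Contact"]},
]
for pair, count in co_occurrence(sorts).most_common():
    print(pair, count)
```

Pairs that most participants grouped together are good candidates for sharing a navigation category. Pairs with low counts suggest users do not see those pages as related.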

In moderated user testing, someone moderates the testing experience. It could be an individual in a room with participants or a moderator in a discussion group. Whatever the case, moderators can answer questions from participants, help guide the process, and provide insight into what the website wants to achieve.

Moderated user testing is also useful when you want someone to explain how a product or interface works. If confusion exists, there's probably an issue with UX. A moderator can report back on what he or she witnessed during the test.

Many experts believe that unmoderated user testing is more effective. After all, won't people feel more comfortable sharing their actual feelings when nobody's breathing down their necks?

This is true to a certain extent, but you also have to leave room for user error and unfocused participant behavior. Running both moderated and unmoderated user experience testing can make the results far more accurate.

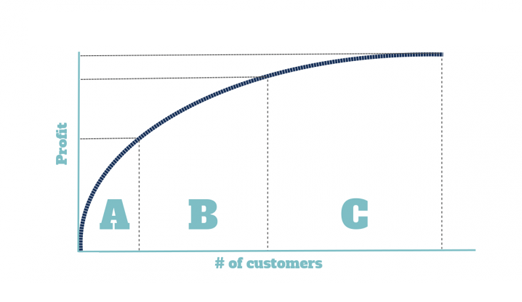

For instance, fewer than 5 percent of dissatisfied customers actually contact the company to complain. This means that more than 95 percent simply suffer in silence, and according to one study, 90 percent will never return to that business.

Learning how to listen to your customers can become an excellent exercise in user experience testing. Sources of information might include:

You don't have to wait for users to contact you. Consider setting up a poll or survey to ask people for their opinions directly. You can do this on your blog, homepage, or anywhere else you like. If you have a large social following, consider asking users to weigh in on Facebook, Twitter, or Instagram.

Your customers can help you improve your business, gain a better understanding of your market and the competition, and even bring you referrals. If you already talk to your customers, great. That's smart. Good questions will get you good information. However, for information that will give you an advantage in the marketplace, you need better questions.

When your brand appears, sounds, or behaves differently from one page of your website to the next, you risk confusing — or worse, alienating — your target audience. It's kind of like your favorite comedian. You're used to his specific brand of jokes.

Then you buy tickets to his latest show, and you're flabbergasted. He sounds nothing like himself. And you might never buy tickets to one of his shows again. Why? You wouldn't know what to expect because his credibility has been shattered.

I run lots of A/B tests. If you asked me how many I've conducted over my career, I couldn't tell you. And I still stand by them. There's no easier way to compare two versions of a marketing asset, collect results from your target audience, and identify a clear winner.

You change one element on the page or in the email — or whatever you're testing — and you push both versions live. At the end, you know which one performed better.

It's like the two book covers I showed you above. I could make the test a little more practical by changing nothing more than the color.
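Knowing which version "performed better" usually means checking that the gap is bigger than random noise. One common way to do that, though not the only one, is a two-proportion z-test on conversion rates. Here's a minimal sketch using only Python's standard library. The function name `ab_test` and the visitor and conversion numbers are made up for illustration.

```python
from math import erf, sqrt


def ab_test(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test for an A/B test.

    conv_a / conv_b: number of conversions for each version.
    n_a / n_b: number of visitors who saw each version.
    Returns (rate_a, rate_b, z, two_sided_p_value).
    Assumes at least one conversion and one non-conversion overall,
    otherwise the standard error is zero.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the assumption that A and B are the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal CDF.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return rate_a, rate_b, z, p_value


# Hypothetical numbers: version B changes only the color.
rate_a, rate_b, z, p_value = ab_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.3f}")
```

A small p-value (many teams use 0.05 as the cutoff) suggests the difference is unlikely to be chance alone. With small samples, or if you stop the test early, the winner can look clearer than it is.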